
MemoryBank: Enhancing Large Language Models with Long-Term Memory

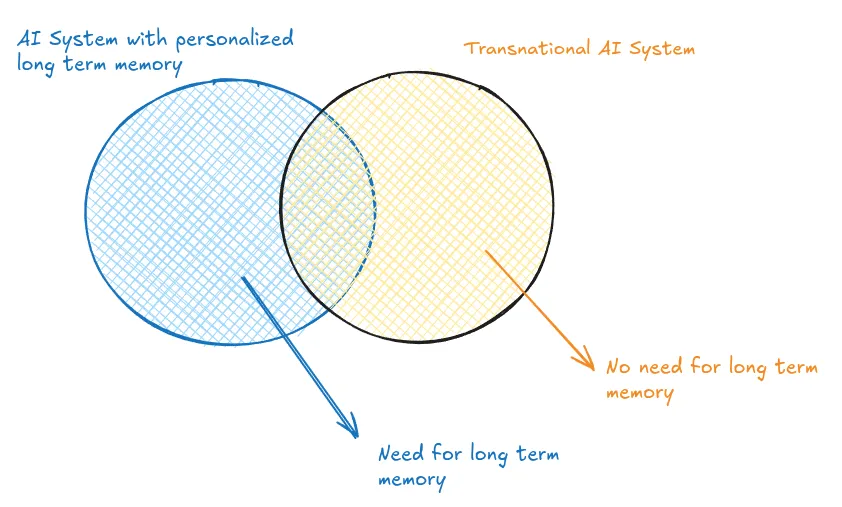

Large language models (LLMs) still lack long-term memory.


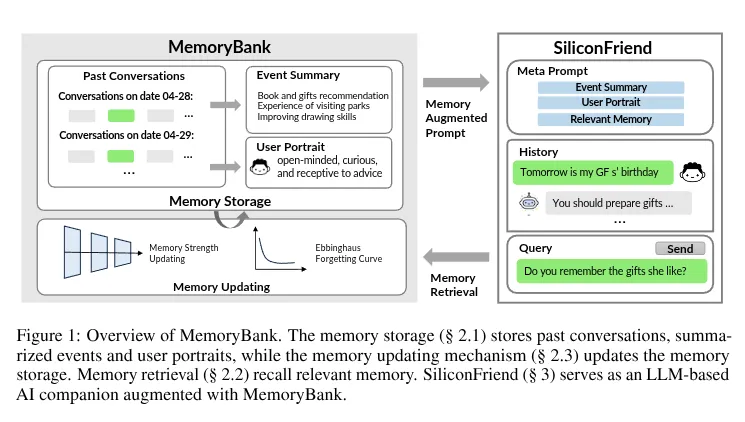

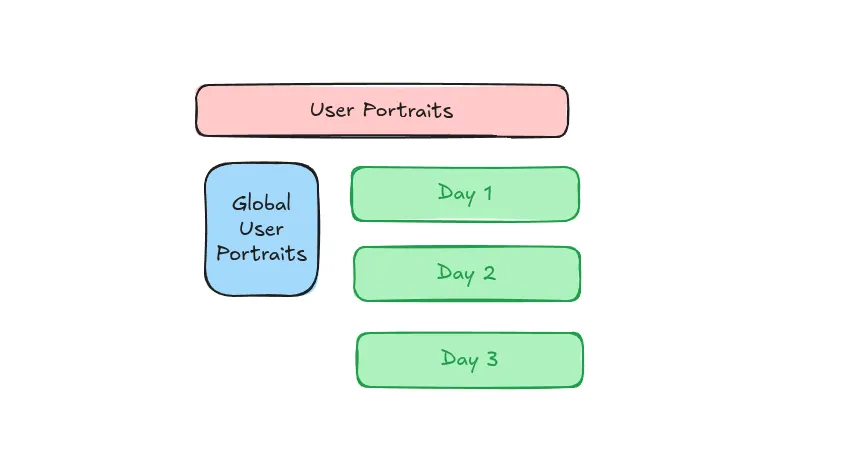

MemoryBank enables the models to summon relevant memories, continually evolve through continuous memory updates, and comprehend and adapt to a user’s personality over time by synthesizing information from previous interactions.


Long-term memory in AI is vital to maintain contextual understanding, ensure meaningful interactions and understand user behaviors over time.


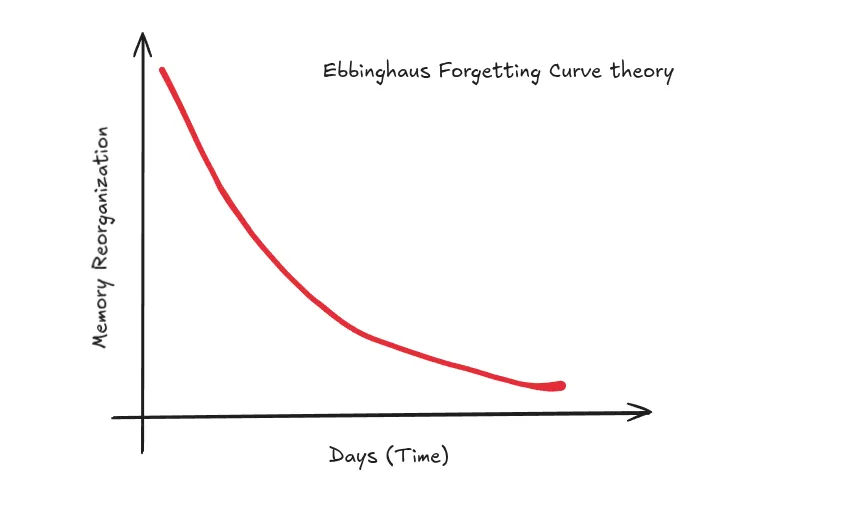

MemoryBank further incorporates a dynamic memory mechanism that closely mirrors human cognitive processes. This mechanism lets the AI remember, selectively forget, and strengthen memories based on the time elapsed, offering a more natural and engaging user experience.

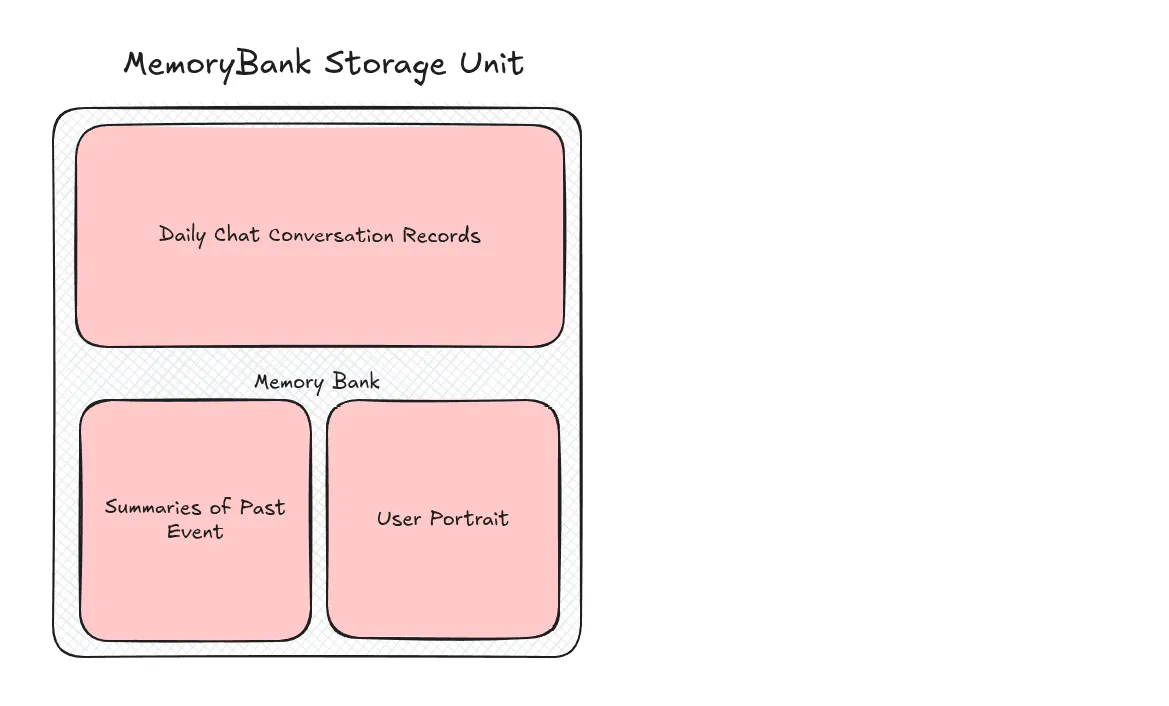

Architecture


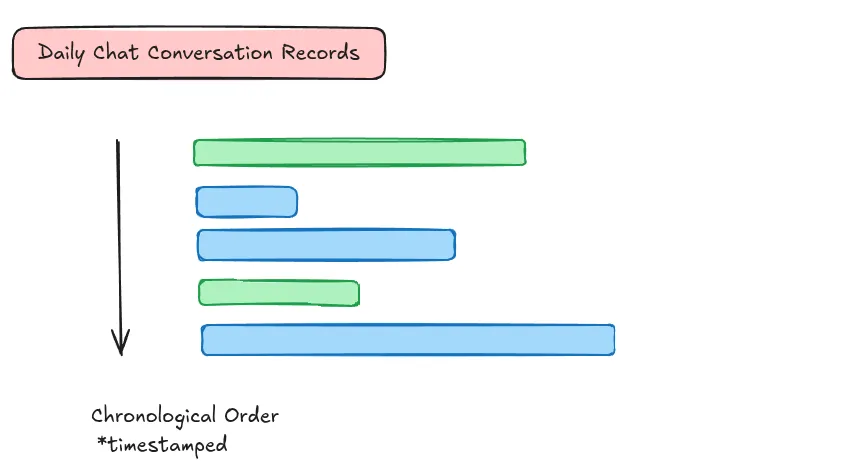

This detailed record not only aids in precise memory retrieval but also facilitates the memory updating process afterwards, offering a detailed index of conversational history.


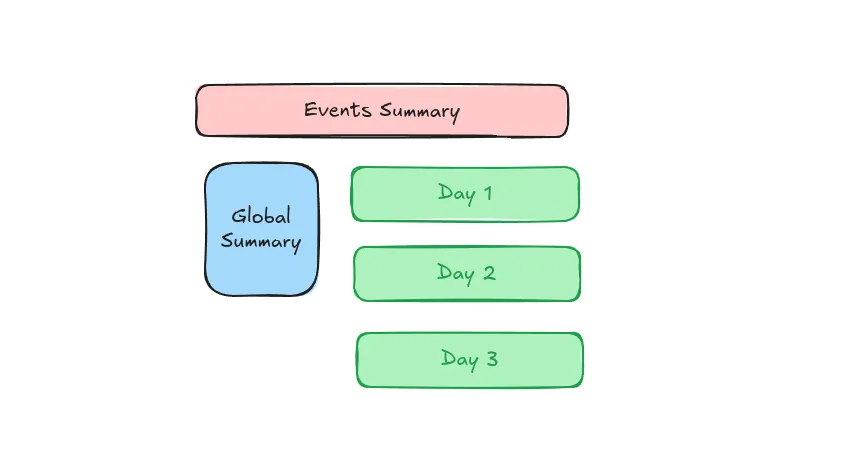

This process results in a hierarchical memory structure, providing a bird’s-eye view of past interactions and significant events.
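A minimal sketch of such a hierarchical store follows. It assumes that raw, timestamped conversation turns are kept per day, that each day is summarized, and that the daily summaries are rolled up into one global summary. The class and method names (`MemoryStorage`, `add_turn`, `summarize_days`) are hypothetical, and the `summarize` callable stands in for whatever LLM call produces the summaries. This illustrates the idea and is not the paper's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for an LLM summarization call:
# takes a list of texts and returns one summary string.
Summarizer = Callable[[List[str]], str]


@dataclass
class MemoryStorage:
    """Sketch of a hierarchical memory store (assumed layout)."""
    # Bottom layer: date -> verbatim (speaker, utterance) turns, kept so
    # retrieval and updating can point back to the exact history.
    turns: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)
    # Middle layer: one summary per day of conversation.
    daily_summaries: Dict[str, str] = field(default_factory=dict)
    # Top layer: a single bird's-eye summary over all days.
    global_summary: str = ""

    def add_turn(self, date: str, speaker: str, text: str) -> None:
        self.turns.setdefault(date, []).append((speaker, text))

    def summarize_days(self, summarize: Summarizer) -> None:
        # Summarize each day's raw turns (ISO dates sort chronologically).
        for date, day_turns in sorted(self.turns.items()):
            lines = [f"{speaker}: {text}" for speaker, text in day_turns]
            self.daily_summaries[date] = summarize(lines)
        # Roll the daily summaries up into the global summary.
        ordered = [self.daily_summaries[d] for d in sorted(self.daily_summaries)]
        self.global_summary = summarize(ordered)


store = MemoryStorage()
store.add_turn("2023-05-01", "user", "I started learning Rust today.")
store.add_turn("2023-05-02", "user", "My cat is called Miso.")
# Toy summarizer for illustration only: concatenates its inputs.
store.summarize_days(lambda texts: " | ".join(texts))
print(store.global_summary)
```

Keeping the verbatim turns alongside the summaries means a coarse summary can always be traced back to the exact conversation it came from.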

Retriever

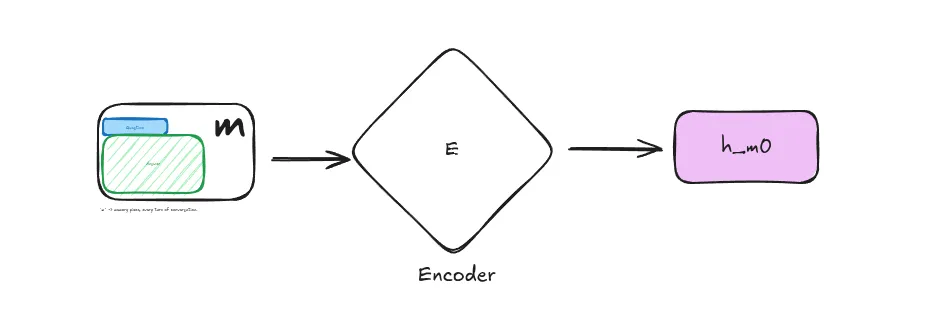

Using Dense Passage Retrieval (DPR):

$h_m$ is the vector representation (embedding) of a memory piece $m$.

The entire memory storage $M$ is the set of these embeddings: $M = \{h_{m_0}, h_{m_1}, h_{m_2}, h_{m_3}, \ldots\}$.

These vector embeddings are then indexed with FAISS for fast retrieval.

In the current chat session, the conversation context $c$ is encoded into an embedding $h_c$, which is used to query the FAISS index for the most relevant memories.
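The retrieval step can be sketched end to end in plain Python. This is a toy under stated assumptions. `embed` is a hypothetical hashed bag-of-words encoder standing in for the DPR encoder. `MemoryIndex` is a hypothetical brute-force inner-product search standing in for the FAISS index; in FAISS, an exact inner-product index such as `IndexFlatIP` plays this role. Each memory is scored by $h_c^\top h_m$, and the top $k$ pieces are returned.

```python
import math
import zlib
from typing import List, Tuple

DIM = 4096  # hypothetical embedding size for the toy encoder


def embed(text: str) -> List[float]:
    """Toy stand-in for a DPR encoder: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        # crc32 is stable across runs, unlike Python's salted hash().
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


class MemoryIndex:
    """Brute-force inner-product index; FAISS would replace this at scale."""

    def __init__(self) -> None:
        self.texts: List[str] = []
        self.vectors: List[List[float]] = []  # the set M = {h_m0, h_m1, ...}

    def add(self, memory: str) -> None:
        self.texts.append(memory)
        self.vectors.append(embed(memory))

    def search(self, context: str, k: int = 1) -> List[Tuple[float, str]]:
        h_c = embed(context)  # embedding of the current context c
        scored = [
            (sum(a * b for a, b in zip(h_c, h_m)), text)
            for h_m, text in zip(self.vectors, self.texts)
        ]
        # Highest inner product first; keep the top-k memory pieces.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


index = MemoryIndex()
for piece in [
    "user adopted a cat named miso",
    "user is learning rust programming",
    "user prefers tea over coffee",
]:
    index.add(piece)

print(index.search("cat miso", k=1))
```

Because the vectors are L2-normalized, the inner product here equals cosine similarity. A learned dense encoder would also match paraphrases that share no words with the stored memory, which this toy encoder cannot do.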

Memory updating mechanism


For scenarios that call for more anthropopathic (human-like) memory behavior, memory updating is needed.

Forgetting less-needed memories can also make the system more efficient.

Retention rate: $R = e^{-t/S}$

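$R$ is the retention rate, the fraction of a memory that is retained.

$t$ is the time elapsed since the memory was learned.

$S$ is the memory strength; a larger $S$ means slower forgetting.

$e$ is Euler's number (≈ 2.718).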
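A minimal sketch of how the retention rate could drive both forgetting and strengthening follows. The update rule is an assumption and is not stated above. Time is measured in days, every memory starts with $S = 1$, recalling a memory increments $S$ by 1 and resets $t$ to 0, and a memory is dropped once $R$ falls below a hypothetical threshold of 0.1. For example, with $S = 1$ and $t = 1$ the formula gives $R = e^{-1} \approx 0.368$. After one recall, $S = 2$, so one day later $R = e^{-0.5} \approx 0.607$. The names `MemoryItem`, `recall`, `forget`, and `FORGET_THRESHOLD` are illustrative.

```python
import math
from dataclasses import dataclass
from typing import List

FORGET_THRESHOLD = 0.1  # hypothetical cut-off below which a memory is dropped


def retention(t: float, s: float) -> float:
    """Ebbinghaus-style retention rate R = e^(-t/S)."""
    return math.exp(-t / s)


@dataclass
class MemoryItem:
    text: str
    strength: float = 1.0         # S: assumed to start at 1
    last_recall_day: float = 0.0  # day the memory was stored or last recalled

    def retention_at(self, today: float) -> float:
        # t is the time elapsed since the memory was stored or last recalled.
        return retention(today - self.last_recall_day, self.strength)

    def recall(self, today: float) -> None:
        # Assumed update rule: recalling strengthens the memory (S += 1)
        # and resets the elapsed time t to 0.
        self.strength += 1.0
        self.last_recall_day = today


def forget(memories: List[MemoryItem], today: float) -> List[MemoryItem]:
    """Keep only memories whose retention is still at or above the threshold."""
    return [m for m in memories if m.retention_at(today) >= FORGET_THRESHOLD]


tea = MemoryItem("user prefers tea over coffee")
cat = MemoryItem("user adopted a cat named miso")
cat.recall(today=0.0)  # the cat memory is recalled once, so its S becomes 2

kept = forget([tea, cat], today=3.0)
print([m.text for m in kept])
```

After three days, the never-recalled memory has $R = e^{-3} \approx 0.050$ and is forgotten. The recalled memory has $R = e^{-1.5} \approx 0.223$ and survives, which is the "strengthen with use, fade with time" behavior the mechanism aims for.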