
Notes on “Go Faster: Tuning the Go Runtime for Latency and Throughput” by Paweł Obrępalski

Original Video: https://www.youtube.com/watch?v=GPEQg6rchh4

Pre Req: https://golab.io/talks/understanding-the-go-runtime

Understanding the Go runtime before tuning it is important.


The Go runtime is an essential part of every single Go compiled binary. Understanding what it does for us and the price we pay for it can be interesting.

❤️ these slides https://www.datocms-assets.com/95170/1712916017-espino_2023.pdf

What is the Go Runtime?

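Start with some Go code:

```go
// main.go
package main

import "fmt"

func main() {
	fmt.Println("Hello World")
}
```

We compile it:

```
go build main.go
```

The result is an OS- and architecture-specific (platform-dependent) binary. With CGO_ENABLED=0 it is statically linked. With CGO_ENABLED=1 and a package that actually uses cgo (for example net or os/user on Linux), it is dynamically linked against the C library (libc); a program that only uses fmt, like this one, is still statically linked.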

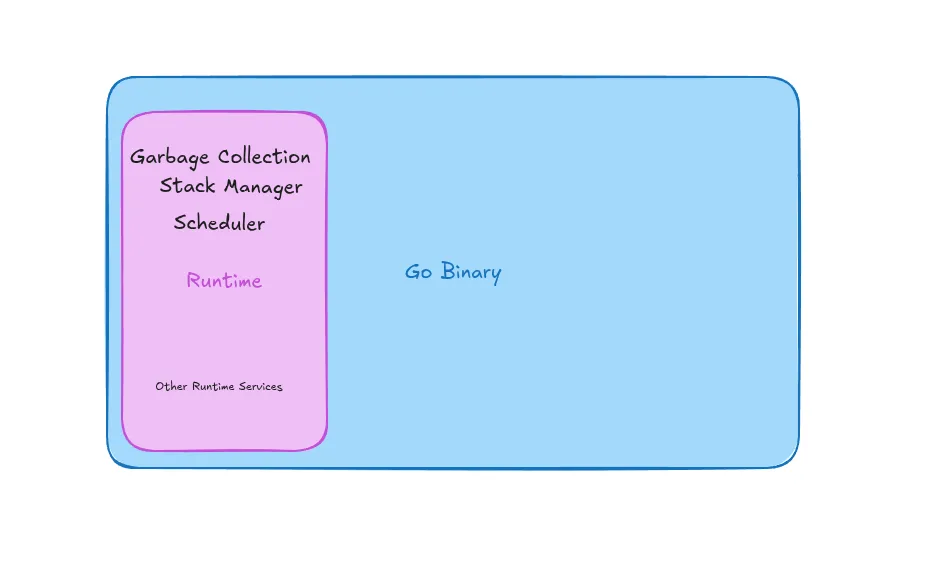

The Go runtime is the part of that executable that manages several crucial tasks at execution time.

“…and the runtime, a big chunk of existing code compiled with our code”

The speaker's focus is on large-scale recommendation systems, so keep this in mind while watching the talk.

Table of contents:

Go Runtime

- Scheduler

- Garbage Collection

Observability

- Metrics

- Profiles

Runtime Tuning

The Go Runtime Benefits

- Automatic Memory Management

- Cross Platform

- Easy concurrency: millions of goroutines.
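To make the "easy concurrency" point concrete, here is a minimal sketch that starts 100,000 goroutines, each incrementing a shared atomic counter, and waits for all of them with a sync.WaitGroup. The count and the helper name `spawn` are arbitrary choices for illustration; it assumes Go 1.19+ for `atomic.Int64`. Each goroutine starts with a small, growable stack (a few kilobytes), which is what keeps very large counts practical.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// spawn (a hypothetical helper) starts n goroutines that each increment a
// shared counter, waits for all of them, and returns the final count.
func spawn(n int) int64 {
	var wg sync.WaitGroup
	var counter atomic.Int64 // atomic.Int64 requires Go 1.19+

	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			counter.Add(1)
		}()
	}
	wg.Wait() // block until every goroutine has called Done
	return counter.Load()
}

func main() {
	fmt.Println(spawn(100_000)) // prints 100000
}
```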

The Go Runtime Costs

- Adds roughly 2 MB to the binary size.

- Longer startup time: not long in practice, but the runtime has to initialize itself (heap, scheduler, package init functions) before main runs.

- Garbage collection overhead: typically 1-2% of CPU, but it can grow much higher depending on the application. Go's new Green Tea GC (greenteagc) has shown promising results in reducing this overhead.

Tuning the Runtime

The defaults are very good for most cases.

Parts of the Runtime

The Scheduler

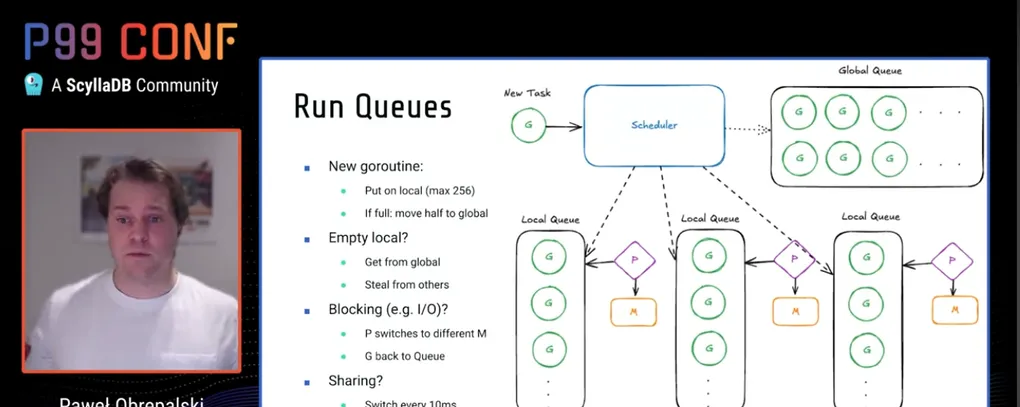

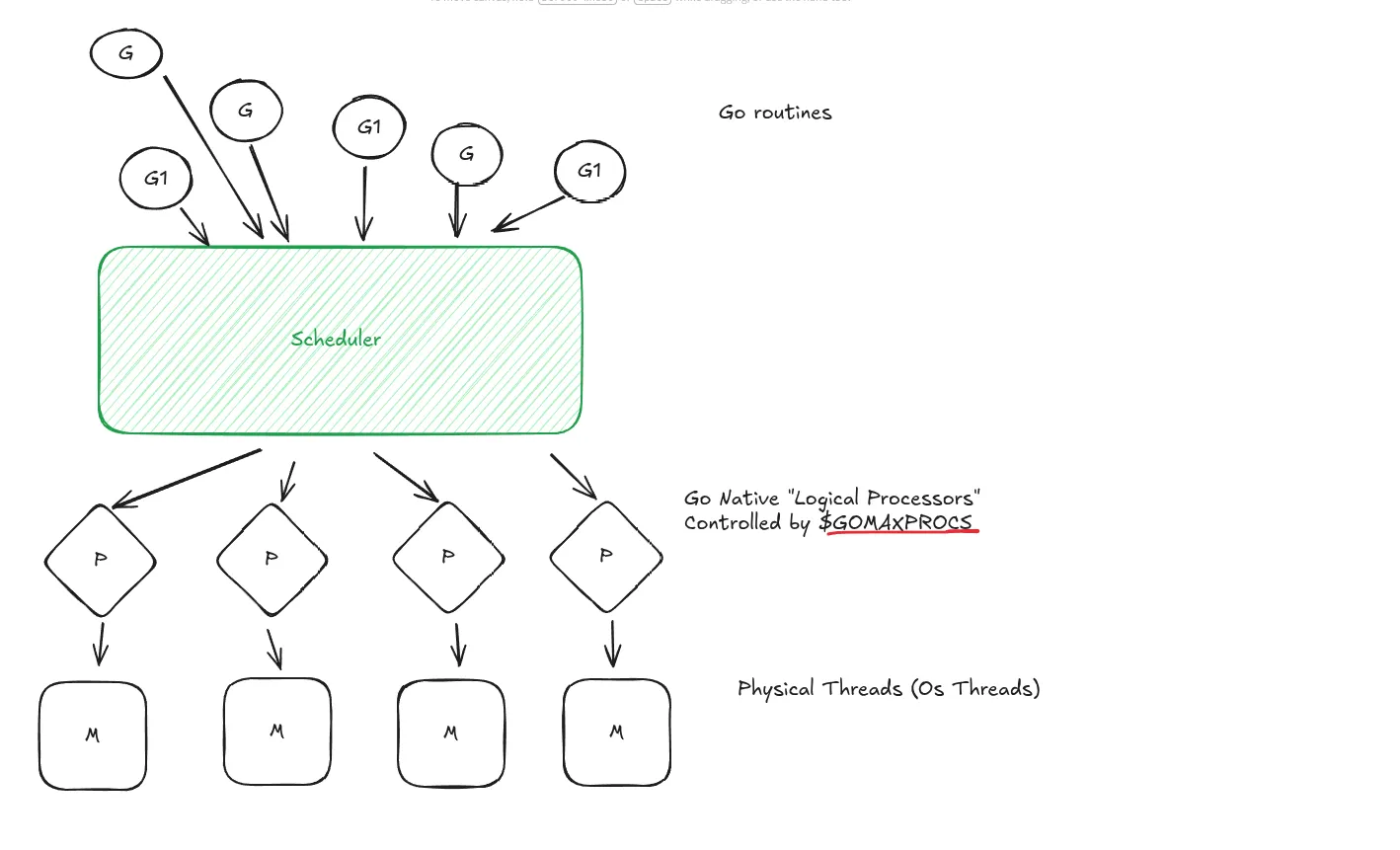

G-M-P Model

- G (goroutines)

- M (OS threads)

- P (logical processors)

The scheduler is the job multiplexer: it takes goroutines (G), assigns them to logical processors (P), and each P runs its goroutines on an OS thread (M).

Besides a local run queue per P, the scheduler has a global run queue. Each P's local queue holds up to 256 goroutines; when it is full, goroutines overflow into the global queue. So, roughly, the global queue holds total runnable goroutines - (num(P) * 256).

When a P's local queue is empty, it takes a goroutine from the global queue or steals about half of another P's local queue (work stealing).
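The queueing behaviour described above can be sketched as a toy model. This is not the runtime's code: the real scheduler also has a `runnext` slot, moves half of a full local queue to the global queue in one go, and checks the global queue periodically for fairness. The types `G`, `P`, `Sched` and the methods `enqueue` and `findWork` are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// localQueueCap mirrors the 256-slot local run queue described above.
const localQueueCap = 256

// G stands in for a goroutine; here it is just an ID.
type G int

// P is a toy logical processor with a bounded local run queue.
type P struct {
	local []G
}

// Sched holds all Ps plus the shared global run queue.
type Sched struct {
	ps     []*P
	global []G
}

// enqueue puts g on p's local queue, spilling to the global queue when full.
func (s *Sched) enqueue(p *P, g G) {
	if len(p.local) < localQueueCap {
		p.local = append(p.local, g)
		return
	}
	s.global = append(s.global, g)
}

// findWork returns the next G for p: local queue first, then the global
// queue, then stealing half of another P's local queue.
func (s *Sched) findWork(p *P) (G, bool) {
	if len(p.local) > 0 {
		g := p.local[0]
		p.local = p.local[1:]
		return g, true
	}
	if len(s.global) > 0 {
		g := s.global[0]
		s.global = s.global[1:]
		return g, true
	}
	for _, victim := range s.ps {
		if victim == p || len(victim.local) == 0 {
			continue
		}
		n := (len(victim.local) + 1) / 2 // steal half, rounded up
		p.local = append(p.local, victim.local[:n]...)
		victim.local = victim.local[n:]
		return s.findWork(p) // now served from p's own local queue
	}
	return 0, false // no runnable goroutines anywhere
}

func main() {
	p0, p1 := &P{}, &P{}
	s := &Sched{ps: []*P{p0, p1}}

	// 600 new goroutines on P0: 256 fit locally, the rest overflow.
	for i := 0; i < 600; i++ {
		s.enqueue(p0, G(i))
	}
	fmt.Println(len(p0.local), len(s.global)) // 256 344

	// P1 is idle: it takes from the global queue first...
	g, _ := s.findWork(p1)
	fmt.Println("P1 runs", g) // P1 runs 256

	// ...and once the global queue is drained, it steals from P0.
	s.global = nil
	g, _ = s.findWork(p1)
	fmt.Println("P1 stole and runs", g, "| P0 left:", len(p0.local)) // P1 stole and runs 0 | P0 left: 128
}
```

Keeping the stealing step last mirrors the ordering in the notes: an idle P only touches another P's queue when there is no other work available.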

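The P pops the next goroutine from its local queue and runs it on its OS thread:

```
r1 <- P[0].Queue.Pop()   // take the next goroutine from P0's local queue
M1 <- r1                 // run it on OS thread M1
```

When r1 blocks in a system call, the P is handed off to another available OS thread (M) so it can keep running the other goroutines in its queue. Goroutines blocked on network I/O are instead parked on the netpoller, and the same M simply picks up the next goroutine.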

Garbage Collector

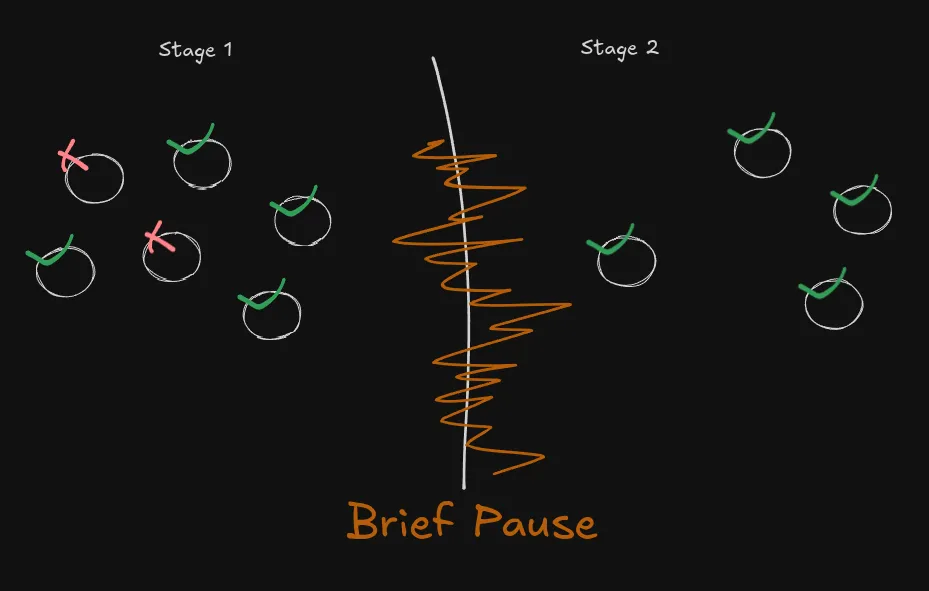

Go uses a concurrent, tri-color mark-and-sweep collector.

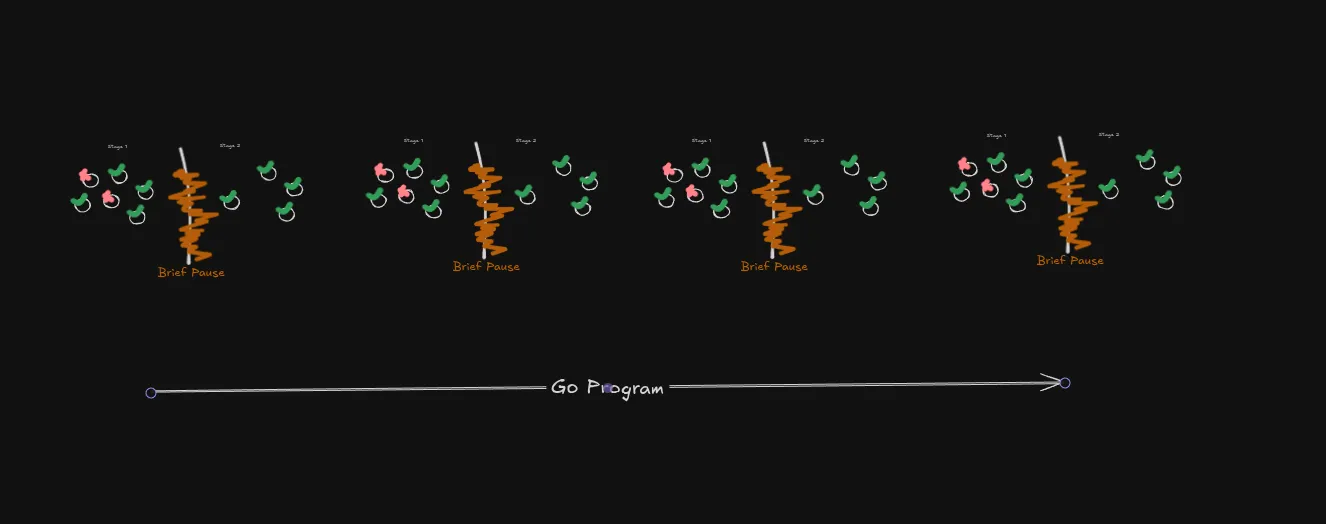

This is one mark-and-sweep cycle. The GC runs concurrently with the application, with multiple GC workers in each phase, and only needs short stop-the-world pauses at the start and end of the mark phase.

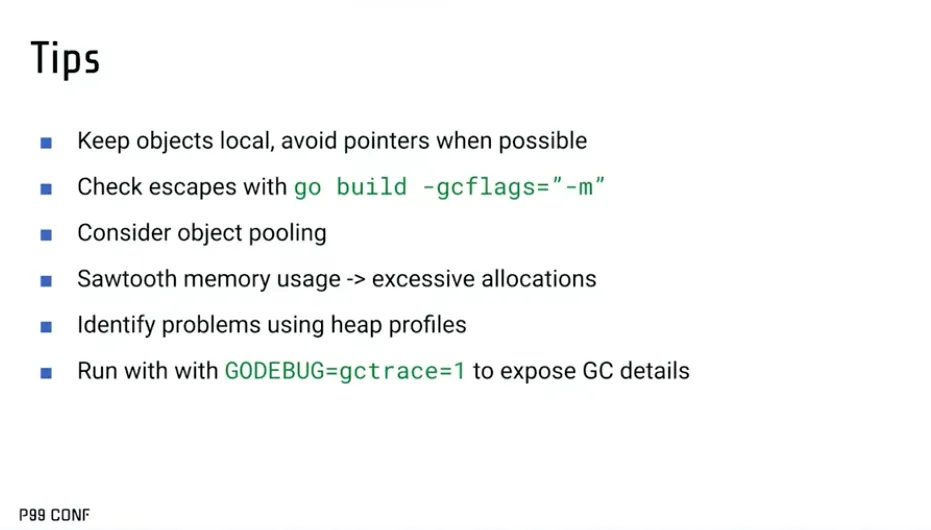

The GC can be tuned with GOGC or GOMEMLIMIT.

Observability

- Application

rps/latencyvia Prometheus. - Via Go

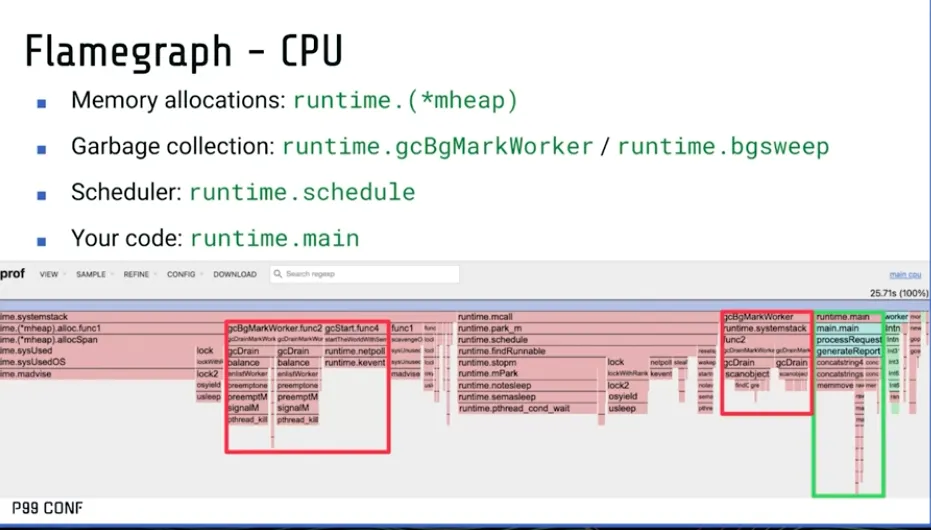

FlightRecorderhttps://go.dev/blog/flight-recorder - Via go CPU / Memory / Allocations / Mutex / Go Routines

profiles/

Runtime Tuning:

Before Go 1.25, GOMAXPROCS defaulted to the number of logical CPUs visible on the host. Containers, however, often get a much smaller CPU limit (say 2 or 4 CPUs). Running with the oversized host value can make the container exceed its CPU quota and get throttled, which delays scheduling and increases latency.

In the past we had to set this value manually; since Go 1.25 the default respects the container's CPU limit instead of the host's CPU count.

GOGC Value

GOGC controls how often the GC runs.

Its value is used to compute the target heap size; once the heap grows past that target, a GC cycle starts:

Target Heap Memory = Live Heap Memory * (1 + GOGC/100)

(This is the classic simplified form; since Go 1.18 the runtime also counts GC roots such as goroutine stacks in the target.)

So: Higher GOGC value -> Higher Target Heap Memory -> Less Frequent GC cycles -> Lower CPU usage -> Higher Memory Usage.

We can also cap memory with GOMEMLIMIT: when the memory used by the Go runtime approaches this limit, a GC cycle runs even if the GOGC target has not been reached.
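The arithmetic of the simplified formula above can be checked with a toy calculation. `heapGoal` is a hypothetical helper, and treating GOMEMLIMIT as a plain cap on the heap goal is a simplification: the real pacer also counts GC roots, and GOMEMLIMIT limits total runtime memory, not just the heap.

```go
package main

import "fmt"

const MiB = 1 << 20

// heapGoal applies goal = live * (1 + GOGC/100) and, if memLimit > 0,
// caps the goal at memLimit. Toy model only; see the caveats above.
func heapGoal(live, gogc, memLimit uint64) uint64 {
	goal := live + live*gogc/100
	if memLimit > 0 && goal > memLimit {
		goal = memLimit
	}
	return goal
}

func main() {
	live := uint64(100 * MiB)
	for _, gogc := range []uint64{50, 100, 200} {
		// 150, 200, 300 MiB: higher GOGC means a larger heap before GC runs.
		fmt.Printf("GOGC=%d  goal=%d MiB\n", gogc, heapGoal(live, gogc, 0)/MiB)
	}
	// With a 250 MiB limit, the GOGC=200 goal (300 MiB) is capped at 250 MiB.
	fmt.Printf("GOGC=200, limit=250MiB  goal=%d MiB\n", heapGoal(live, 200, 250*MiB)/MiB)
}
```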

Combining GOGC and GOMEMLIMIT, we can tune the runtime to run the GC less often while the memory limit helps prevent out-of-memory (OOM) kills. GOMEMLIMIT is a soft limit, so it reduces the risk of OOMs rather than ruling them out.

Go's new Green Tea GC (greenteagc), available as an experiment in Go 1.25 via GOEXPERIMENT=greenteagc, is showing a 10-40% reduction in GC CPU overhead on some workloads.

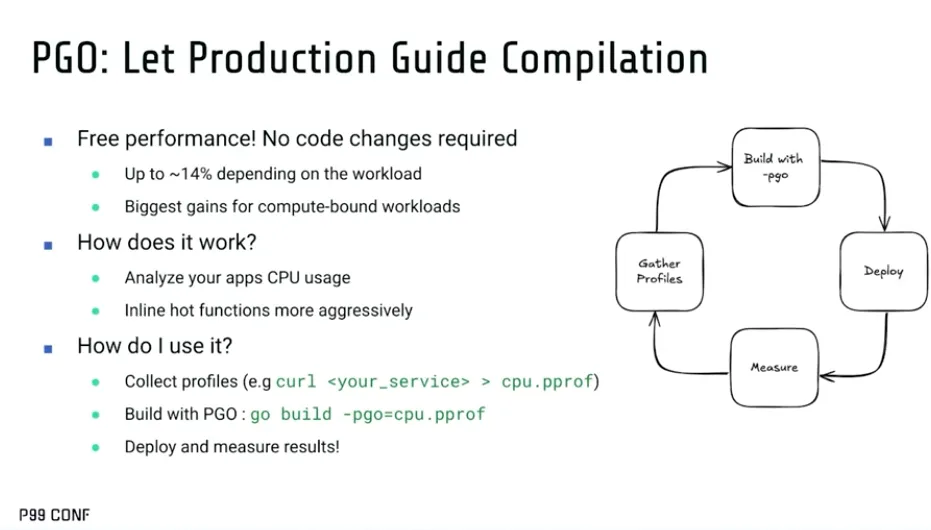

PGO

References: